Possibly the first statistic students encounter is the mean. In a statistics course, they soon discover its partner, the standard deviation. At the same time, students will be introduced to the median and to its partner, the interquartile range.

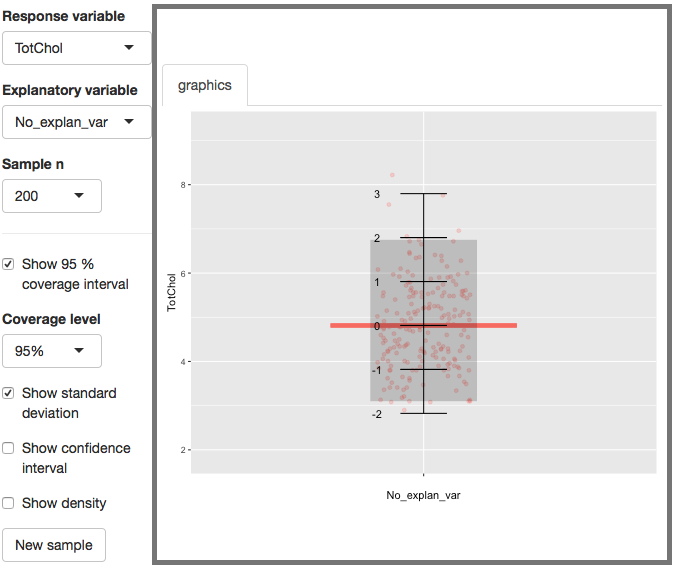

The Little App on center-and-spread is designed to help students see how each of these relate to data. It can also help illuminate some other matters: how good is the normal distribution as an approximation to a variable, skewness, the difference between a coverage range of the data and a confidence interval of a statistic calculated from the data.

Orientation to the app

As is usually the case with Little Apps, graphics use the response vs explanatory variable convention. For this app, the response is quantitative, since “center” and “spread” refer only to quantitative variables. There is a choice of several possible discrete explanatory variables, but for the apps main purposes you can use the default of no explanatory variable. Varying the sample size will be an important component of many lessons.

The data are always shown in the form of a jittered scatterplot. You can choose to display three different statistical annotations on top of the data:

- The mean

- The standard deviation

- The median along with a central interval covering a specified fraction of the data, 95% by default.

There is also a control to show the 95% confidence interval of the mean, standard deviation, and median. These will only be displayed if the corresponding statistical annotation is also being shown.

Finally, there is a control to turn on a display of the density distribution of the sample.

Teaching with the app

This app is intended to be used for several different lessons, spread out over the term. I’ll describe them in a typical chronological order.

The mean and standard deviation

Pick whatever response variable you like. Start with no explanatory variable.

Orient students to the jitter plot, making clear to them that the response variable is on the vertical axis. Ask them to guess what the mean is. Then display the mean. Doing this several times can help students to develop a better intuition.

Point out that the mean is a quantity. Read off the quantity from the vertical axis, making sure to include the physical units. For instance, the mean age is about 37 years. The mean pulse is about 75 beats per minute. For your reference here are some of the units:

- Blood pressure (BPSys1): millimeters of mercury

- Total Cholesterol (TotChol): milli-moles per liter

- Urine flow: milliliters per minute

- Testosterone: nanograms per deciliter

Show that the explanatory variable divides things up into groups, and each group can have its own mean. Now, back to the undifferentiated data, that is, no explanatory variable. (The only reasons for this are to simplify the graphics and to avoid having to talk about the mean and standard deviation when more than one is displayed at the same time.)

Choose one of the data points. Ask your students to tell you how far that point is from the mean. (They can read this off the y-axis. Make sure they include the physical units.) Some points are further from the mean than others. Have your students eyeball how far a typical point is from the mean. This is a quantity with physical units, e.g. about 20 years for Age or 10 beats per minute for pulse, and so on. In stats, rather than talking about “how far” and “distance,” we speak of deviation from the mean. That’s just vocabulary, but it’s an important word to know.

Statisticians have standardized on a way of measuring the typical deviation of the data from the mean. This is called the standard deviation. Just like the answer to the “how far?” question, the standard deviation is a quantity and has units.

The mean and the standard deviation are both quantities and have the same physical units. But they are used for different purposes. The mean refers to a position in the data: where is the center. The standard deviation, on the other hand, is a unit of measurement. If you lay down a ruler on top of the data to measure distances, in statistics that ruler would have marks separated by a distance of one standard deviation.

Turn on the display of standard deviation. It’s shown as a ruler. Translate from the ruler to the y-axis to read off the standard deviation as a quantity, e.g. 20 years for Age and so on.

You can measure each data point on this ruler to see how far it is from the mean. Do this for several points. The vocabulary to describe this distance is the z-score, although physicists, engineers, and some others call it sigmas.

Why introduce standard deviation when we can just as well measure distance in the physical units of the variable? It turns out to be a scale we can use regardless of the variable being described or the units of that variable. (For instance, beats per minute or beats per hour could both be used to measure pulse.)

Show this by changing to different response variables. For each one, translate the ruler to the y-axis to read off the quantity (and its units). Do this for several very different variables.

When you’re done, point out that even though the variables and units were changing, the standard deviation ruler is pretty much fixed in the display. That’s why we use it: it’s nice to have one ruler for measuring anything that comes up.

Note that the standard deviation stays pretty much the same regardless of sample size n. Demonstrate this by increasing the sample size to very large values.

Coverage and the median

This lesson is much like the one about means and standard deviations, but here you’re going to be using the median and coverage range.

A range refers to the region between two values. For example, we can speak of the interval between 20 and 35 years. A coverage range is an interval constructed to cover a given percentage of the data. For instance, the 95% coverage range covers 95% of the data points.

Turn on the coverage range. By convention, we use central coverage ranges. For instance, the 95% coverage range leaves out 2.5% of the data at the high end, and another 2.5% at the low end. A 50% coverage range encompasses half of the data, with a quarter left out at the high end and another quarter at the low end.

Because the median is at the center of the data points, the median is always inside a central coverage range.

Show intervals of various coverage levels for various variables. For some of them, at least, translate the interval to the y-axis to read off the endpoints as physical quantities, e.g. 15 years to 47 years.

Note that the coverage range stays pretty much the same regardless of sample size n. Demonstrate this by increasing the sample size to very large values. (You might have noticed that this very same sentence ended the previous section.)

Median, mean, and skewness

For variables with a symmetrical distribution, the mean is pretty close to the median. (How close? We could use the standard deviation to measure this.) Some of the variables have distributions that are skew or otherwise not symmetrical. For these, the mean and median can differ substantially. Tell your students to set the sample size large, and look to see if they can find any response variable for which the median is more than 1 standard deviation away from the mean.

Coverage and the standard deviation.

In this lesson, display both the coverage range and the standard deviation ruler. You’re going to be showing that the coverage range can be approximated using the standard deviation ruler. That is,

- The 95% coverage range is generally close to the interval between -2 and 2 standard deviations.

- The 67% coverage range is close to the interval between -1 and 1 standard deviation.

- The 50% coverage range generally falls inside the -1 to 1 standard deviation range.

- The 99% coverage range runs from about -2.5 to 2.5 standard deviations.

Write down these approximations on the board. Then try this out for several different response variables. For each variable, go systematically from 50% to 67% to 95% to 99% and ask which of the approximations holds for each coverage level. When you’re at 95% or 99%, you can count how many data points fall outside of the interval. Turn this into a fraction by dividing the count by the sample size n.

The approximation will be a little better for large sample sizes than small ones. But you’ll see that some variables are a much better match than others. The variables that match the best tend to have distributions that are pretty symmetric. (You can check the show-density box to see a depiction of the distribution.)

At the very end of the response-variable list you’ll find variables labelled idealized normal and idealized uniform. These aren’t part of the NHANES data: they are random numbers generated on the computer to match a normal and uniform distribution respectively. By turning up the sample size, you’ll see how good the correspondence between coverage range and the standard deviation ruler can be.

The precision of stats

When you calculate a statistic, it’s helpful to know how precisely you know that quantity. In this center-and-spread app you have the mean, median, and standard deviation. Check the “show 95% confidence intervals” box to show the precision of any of the stats that are being displayed. (Probably you want to do one at a time to keep things from getting too busy.) The particular measure of precision being shown is the 95% confidence interval.

In this lesson, the main point is to show that the length of the confidence interval depends strongly on the sample size n. Compare the length of the confidence interval for n of 50 and 200, 200 and 1000, 500 and 2000, and so on. Since the length of the confidence interval scales with 1/√n, the n = 50 interval should be about twice as long as the n=200 interval.

Remind students that a standard deviation is a nice measure of how far a data point is from the mean. The confidence interval isn’t about an individual data point, but about a statistic calculated from n data points. We’ll use the confidence interval also as a kind of ruler, but instead of measuring the distance between data points it measures a kind of “plausibility distance” between statistics calculated on two groups. If the plausibility distance is small, we don’t have any good reason to believe that the two groups are different in terms of the statistic.